
Author: admin Time: 2021-12-16

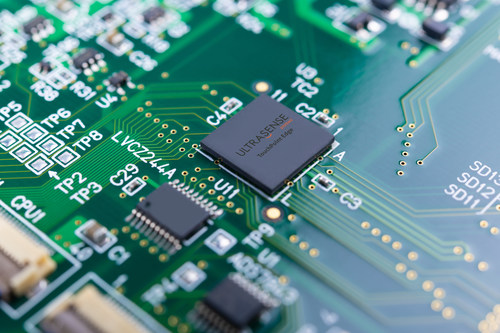

According to foreign media reports, UltraSense Systems, a company specializing in ultrasonic sensors, has announced its next-generation multimodal touch sensing solution, TouchPoint Edge. The solution is designed to cost-effectively replace clusters of mechanical buttons with sensing beneath a range of surface materials (such as metal, glass, and plastic), and to further drive the UI/UX paradigm shift from mechanical to digital interfaces for smart surfaces.

TouchPoint Edge is a fully integrated system-on-chip (SoC) that replicates the touch input of mechanical buttons by directly sensing eight independent UltraSense TouchPoint P sensors, which combine ultrasonic and force sensing. An embedded, real-time neural touch engine (NTE) distinguishes intentional from unintentional touches, eliminating corner cases and delivering the input accuracy of a mechanical button.

(Image Source: UltraSense Systems)

In the coming years, smart surfaces will further change the way people interact with products. Smart surfaces are solid surfaces with backlighting that shows the user where to touch. This UI/UX paradigm shift began more than a decade ago, when smartphones replaced mechanical keyboards with taps on capacitive screens. However, not all smart surfaces are capacitive displays. UltraSense Systems' first-generation sensor, TouchPoint Z, improved the user experience (UX) of smartphones, electric toothbrushes, household appliances, and automotive interior dome lights by removing mechanical buttons cost-effectively, marking another paradigm shift.

TouchPoint Edge significantly improves the user experience in applications with many mechanical buttons. In a car cabin, for example, it can replace the mechanical buttons on the steering wheel, on the center and overhead consoles for HVAC and lighting, and on the door panels for seat and window controls. It can even be embedded beneath soft surfaces such as leather or in foam seats, creating a more user-friendly interface. Other applications include appliance touch panels, smart locks, security access control panels, and elevator button panels.

Human-machine interfaces are highly subjective, and replicating the press of a mechanical button under a solid surface is complex. It takes more than detecting a force above a threshold to register a press. When a user presses a mechanical button, the applied force changes over time because of friction, hysteresis, air gaps, and spring characteristics, producing many nonlinear responses. As a result, simple piezoresistive or MEMS force-touch strain sensors paired with basic algorithms and one- or two-level trigger thresholds cannot accurately reproduce the mechanical-button user experience while eliminating false triggers.
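To make the limitation concrete, here is a minimal sketch of the kind of two-level (press/release) threshold detector the paragraph above describes as insufficient. It is an illustration only, not UltraSense's algorithm; the function name, threshold values, force units, and sample traces are all hypothetical.

```python
from typing import Iterable, List


def two_level_threshold_presses(
    force: Iterable[float],
    press_threshold: float = 1.0,    # hypothetical value, arbitrary force units
    release_threshold: float = 0.6,  # hypothetical; must be below press_threshold
) -> List[int]:
    """Return the sample indices at which a press is registered.

    A press fires when the force rises to press_threshold; the detector
    re-arms only after the force falls to release_threshold (hysteresis).
    """
    if release_threshold >= press_threshold:
        raise ValueError("release_threshold must be below press_threshold")
    pressed = False
    press_indices: List[int] = []
    for i, f in enumerate(force):
        if not pressed and f >= press_threshold:
            pressed = True
            press_indices.append(i)
        elif pressed and f <= release_threshold:
            pressed = False
    return press_indices


# Made-up force traces: a deliberate press and a brief accidental bump.
deliberate = [0.0, 0.3, 0.8, 1.2, 1.3, 0.9, 0.4, 0.0]
accidental = [0.0, 0.2, 1.1, 0.1, 0.0]

print(two_level_threshold_presses(deliberate))
print(two_level_threshold_presses(accidental))
```

Both traces register exactly one press, because the detector looks only at whether the force crossed a level, not at how it evolved over time. That lost shape information is what a learned model can use to tell the two apart.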

TouchPoint Edge combines multimodal sensing with an embedded neural touch engine that runs machine learning and neural network algorithms on-chip, allowing it to understand user intent. Like TouchPoint Z, TouchPoint Edge can capture the unique patterns of users pressing on a surface material. This dataset is then used to train a neural network to learn and distinguish users' pressing patterns, unlike traditional algorithms that accept a single force threshold. Once TouchPoint Edge is trained and optimized for users' pressing patterns, it can identify the most natural response to a button press. In addition, the unique sensor array design of the TouchPoint P transducer captures multichannel datasets within a small, localized area, comparable to where a mechanical button would be placed, which greatly improves the neural network's performance in replicating button presses. The neural touch engine improves the user experience and, combined with TouchPoint P's proprietary sensor design, provides optimal performance.

Integrating the neural touch engine into TouchPoint Edge also significantly boosts system efficiency. Compared with offloading the same workload to an external ultra-low-power microcontroller, neural processing on TouchPoint Edge runs 27 times faster while consuming 80% less power.
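As a rough back-of-the-envelope reading of those two figures, the sketch below combines them into energy per inference using energy = power x time. This rests on assumptions the article does not confirm: that both figures describe the same workload, and that the 80% figure is the power drawn while that workload runs. The function name is hypothetical.

```python
def relative_energy_per_inference(speedup: float, power_reduction: float) -> float:
    """Energy per inference relative to the baseline, using energy = power x time.

    Assumes (not stated in the article) that both figures describe the same
    workload and that the power figure applies while that workload runs.
    """
    relative_time = 1.0 / speedup           # 27x faster -> 1/27 of the time
    relative_power = 1.0 - power_reduction  # 80% less   -> 0.2 of the power
    return relative_power * relative_time


ratio = relative_energy_per_inference(speedup=27, power_reduction=0.80)
print(f"relative energy per inference: {ratio:.4f}")
print(f"baseline uses about {1 / ratio:.0f}x more energy per inference")
```

Under those assumptions, each inference would cost 0.2/27 of the baseline energy, or about 135 times less. If the 80% figure instead refers to average system power, this estimate does not apply.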

Key features of TouchPoint Edge include a neural touch engine for processing machine learning and convolutional neural networks; an open interface that lets the neural touch engine process non-proprietary and even non-touch sensor inputs (e.g., inertial, piezoelectric, positional, and force sensors); support for directly driving and sensing eight independent multimodal TouchPoint P sensors; an embedded MCU and ALU for algorithm processing and sensor post-processing; an integrated analog front end (AFE); configurable power management and frame rates; I2C and UART serial interfaces; two GPIOs for direct connection to haptics, LEDs, PMICs, etc.; an operating temperature range of -40°C to +105°C; and a 3.5 mm x 3.5 mm x 0.49 mm WLCSP package.

Key features of TouchPoint P include multimodal independent piezoelectric transducers for ultrasonic and strain sensing; an operating temperature range of -40°C to +105°C; and a 2.6 mm x 1.4 mm x 0.49 mm QFN package.